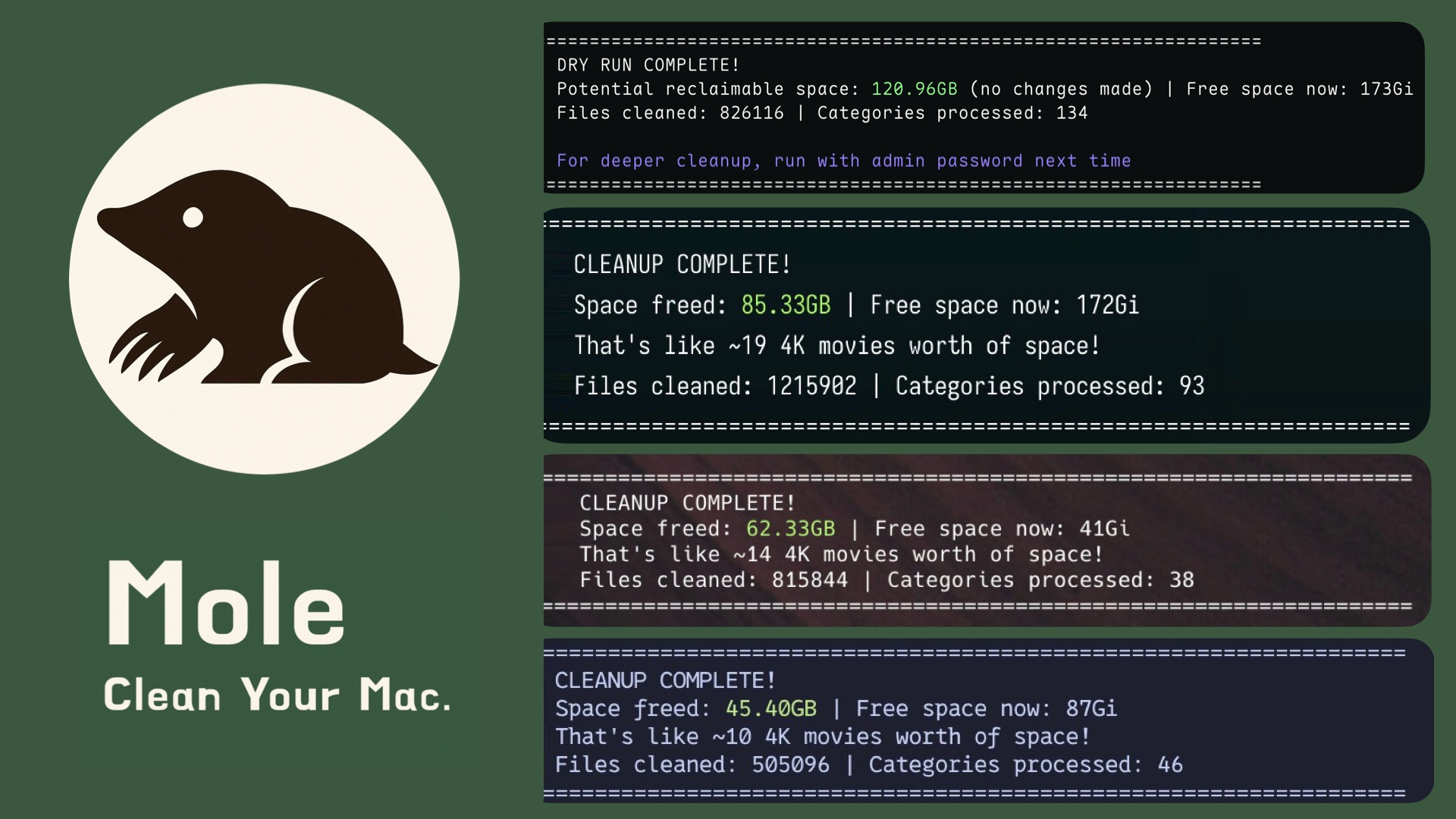

Deep clean and optimize your Mac.

## Support

- If Mole saved you disk space, consider starring the repo or [sharing it](https://twitter.com/intent/tweet?url=https://github.com/tw93/Mole&text=Mole%20-%20Deep%20clean%20and%20optimize%20your%20Mac.) with friends.
- Have ideas or fixes? Check our [Contributing Guide](CONTRIBUTING.md), then open an issue or PR to help shape Mole's future.
- Love Mole? Buy Tw93 an ice-cold Coke to keep the project alive and kicking! 🥤
